The $3 AI Chip: How to Run TinyML on ESP8266 (No Cloud Required)

CONTENTS.log Table of Contents

Bill of Materials QTY: 5

* SYSTEM.NOTICE: Affiliate links support continued laboratory research.

Artificial Intelligence is usually associated with massive data centers, burning kilowatts of power, and costing thousands of dollars per hour. But what if I told you that you can run a Neural Network on a chip that costs less than a cup of coffee? And what if I told you it could run on a coin cell battery for months?

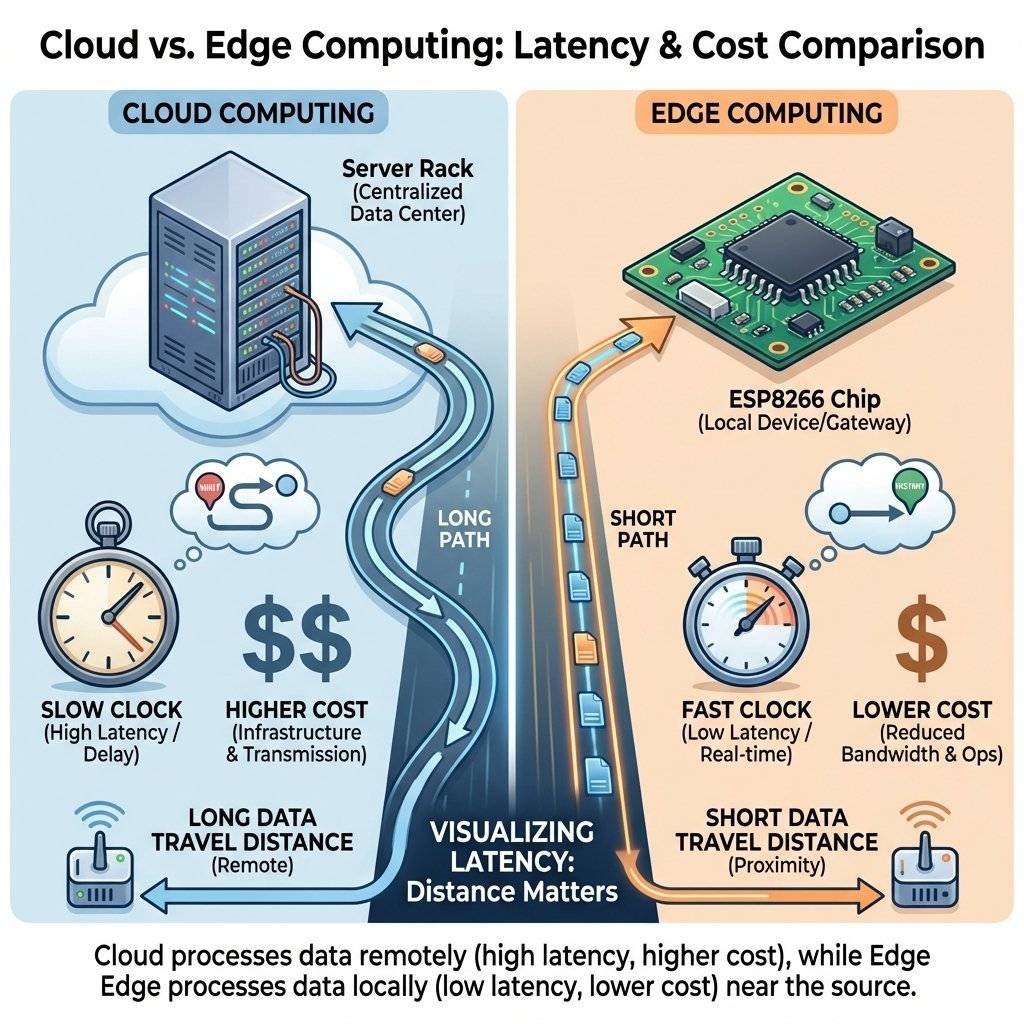

Welcome to TinyML. This is the frontier of “Edge Computing”. Instead of sending data to the Cloud (slow, insecure, expensive), we process it locally on the silicon. Today, we will turn your humble ESP8266 into a brain capable of recognizing gestures, words, or anomalies.

The Problem: The “Cloud” Disconnect

Why do we need TinyML?

- Latency: Decisions happen in milliseconds, not cloud round-trips.

- Bandwidth: Sending “Anomaly Detected” is cheaper than raw data.

- Privacy: Data stays on the device; no eavesdropping.

- Power: Computing is 10x cheaper than Wi-Fi transmission.

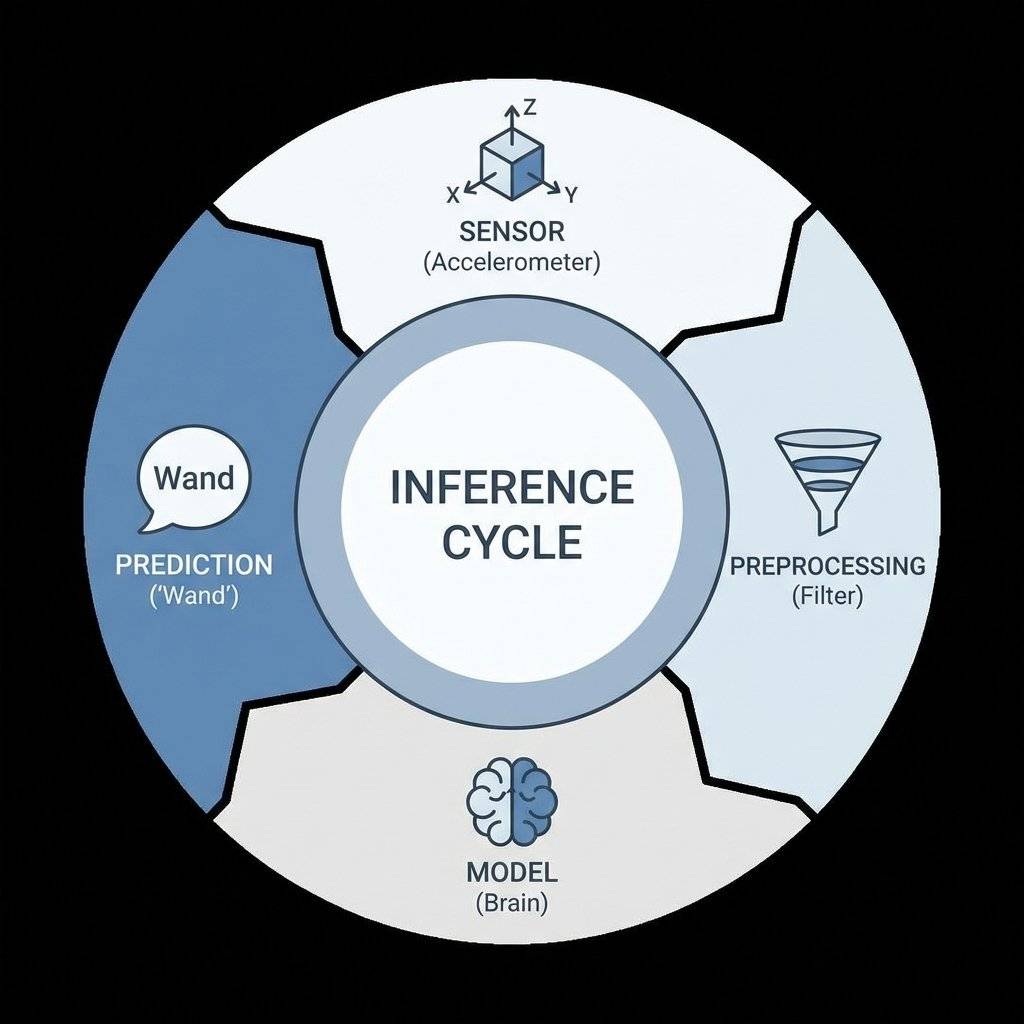

How it Works: The TinyML Pipeline

You cannot train a model on an ESP8266. It lacks the RAM (50kb vs 16GB). Instead, we use a pipeline:

- Capture Data: Record raw sensor data (e.g., gestures).

- Train Model: Learn patterns using powerful GPUs (Colab).

- Convert: Squash the model into a tiny “Lite” version.

- Deploy: Upload the C++ Byte Array to the ESP8266.

- Inference: The chip runs the math in real-time.

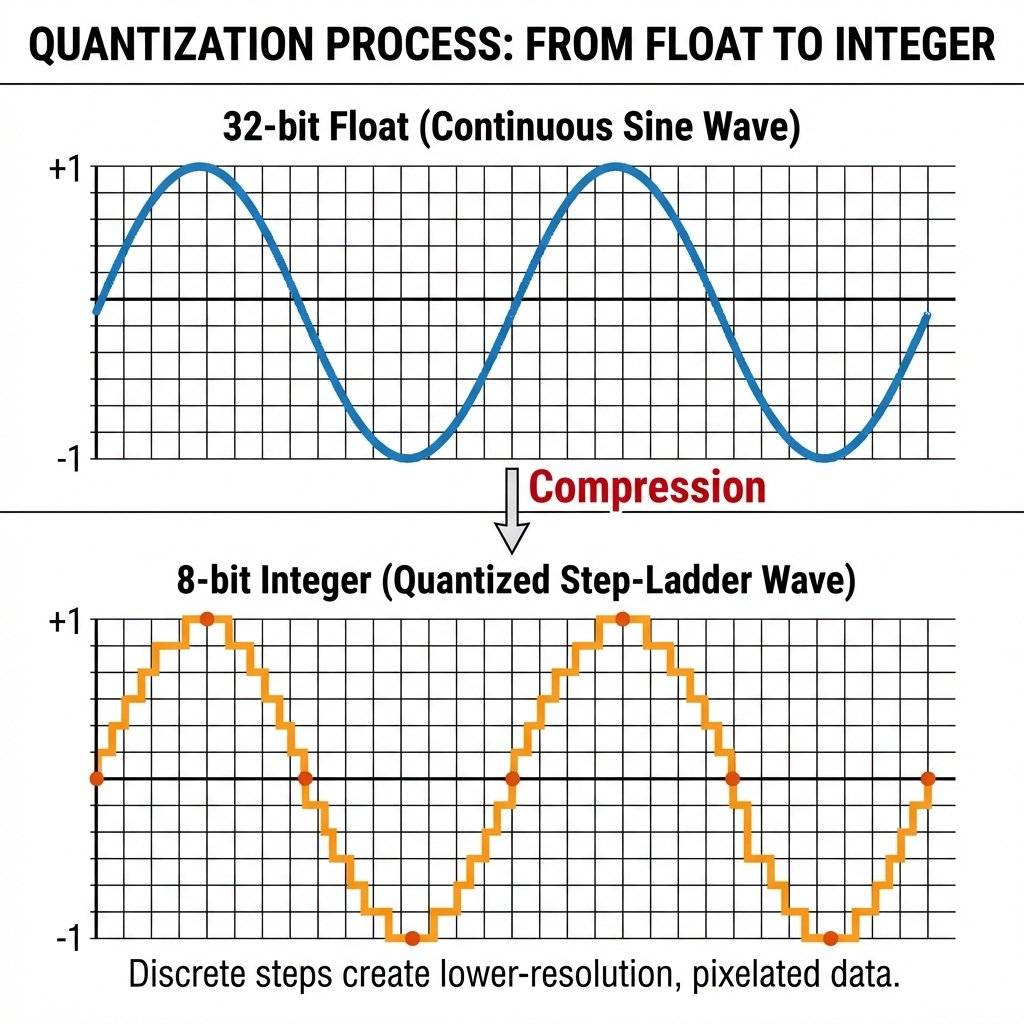

Technical Deep Dive: Quantization (Int8 vs Float32)

Standard Neural Networks use 32-bit Floating Point numbers (e.g., 0.156294).

- Problem: Floats are slow and take 4 bytes each.

- Solution: Quantization (mapping decimals to 8-bit integers).

-

Result: 4x smaller model; <1% accuracy drop.

-

This is how we fit a brain into 50kb of RAM.

Step 1: Data Collection (The Gesture)

We will build a “Magic Wand”.

- Hardware: ESP8266 + MPU6050 (Accelerometer).

- Goal: Recognize “Wingardium”, “Circle”, and “Strike”.

The Code (Data Logger): We need to sample the accelerometer at exactly 100Hz and print it to Serial.

#include <Adafruit_MPU6050.h>

#include <Adafruit_Sensor.h>

#include <Wire.h>

Adafruit_MPU6050 mpu;

void setup() {

Serial.begin(115200);

if (!mpu.begin()) {

while (1) yield();

}

mpu.setAccelerometerRange(MPU6050_RANGE_8_G);

mpu.setGyroRange(MPU6050_RANGE_500_DEG);

mpu.setFilterBandwidth(MPU6050_BAND_21_HZ);

}

void loop() {

sensors_event_t a, g, temp;

mpu.getEvent(&a, &g, &temp);

// Print raw data for Python to grab

Serial.print("DATA,");

Serial.print(a.acceleration.x, 3); Serial.print(",");

Serial.print(a.acceleration.y, 3); Serial.print(",");

Serial.print(a.acceleration.z, 3);

Serial.println();

delay(10); // Approx 100Hz

}1.1 The Code Explained: Why 100Hz?

You might notice delay(10). Neural Networks need consistent timing.

If you train your model on data sampled at 50Hz, but your wand runs at 100Hz, the gesture will look “slow motion” to the brain. It will fail.

- Consistency: Training and inference must use the same frequency.

- Baud Rate:

115200is required for high-speed data. - Range:

8Gprevents signal clipping during gestures.

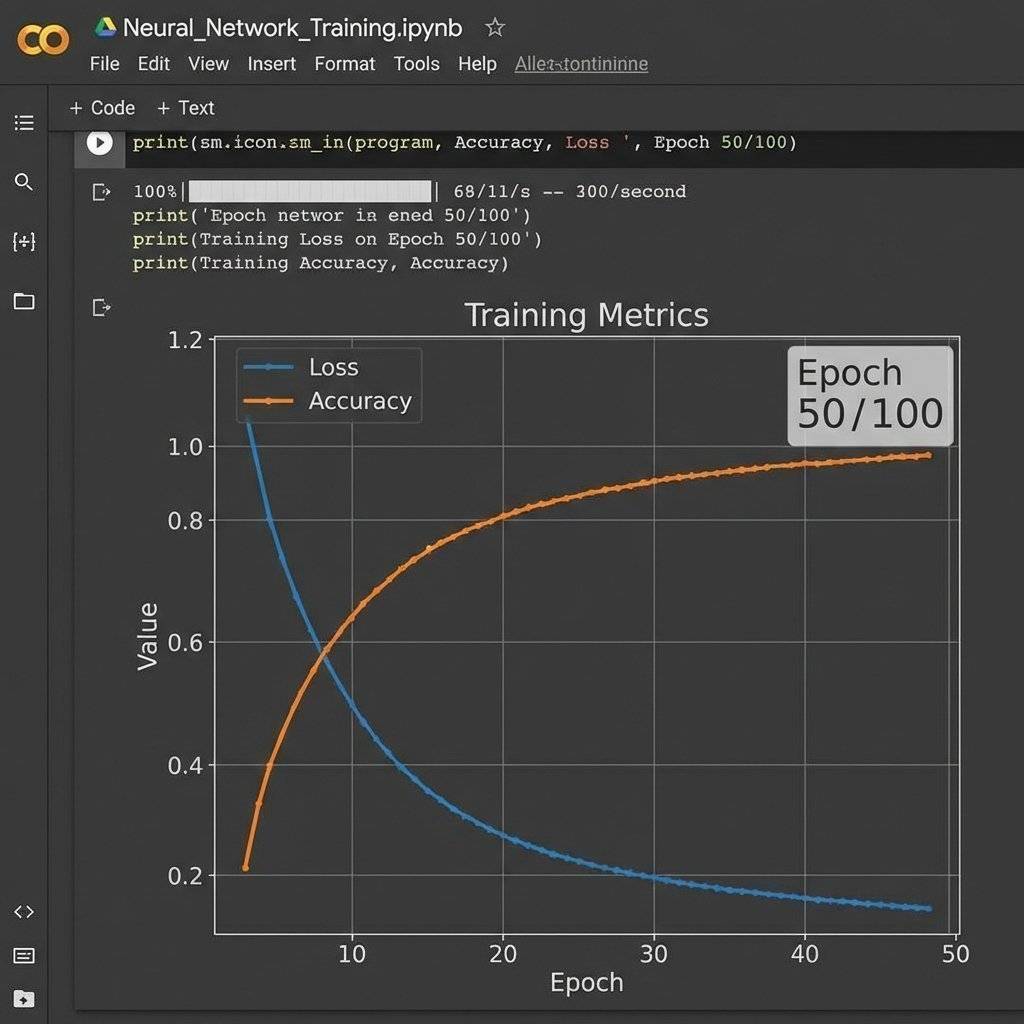

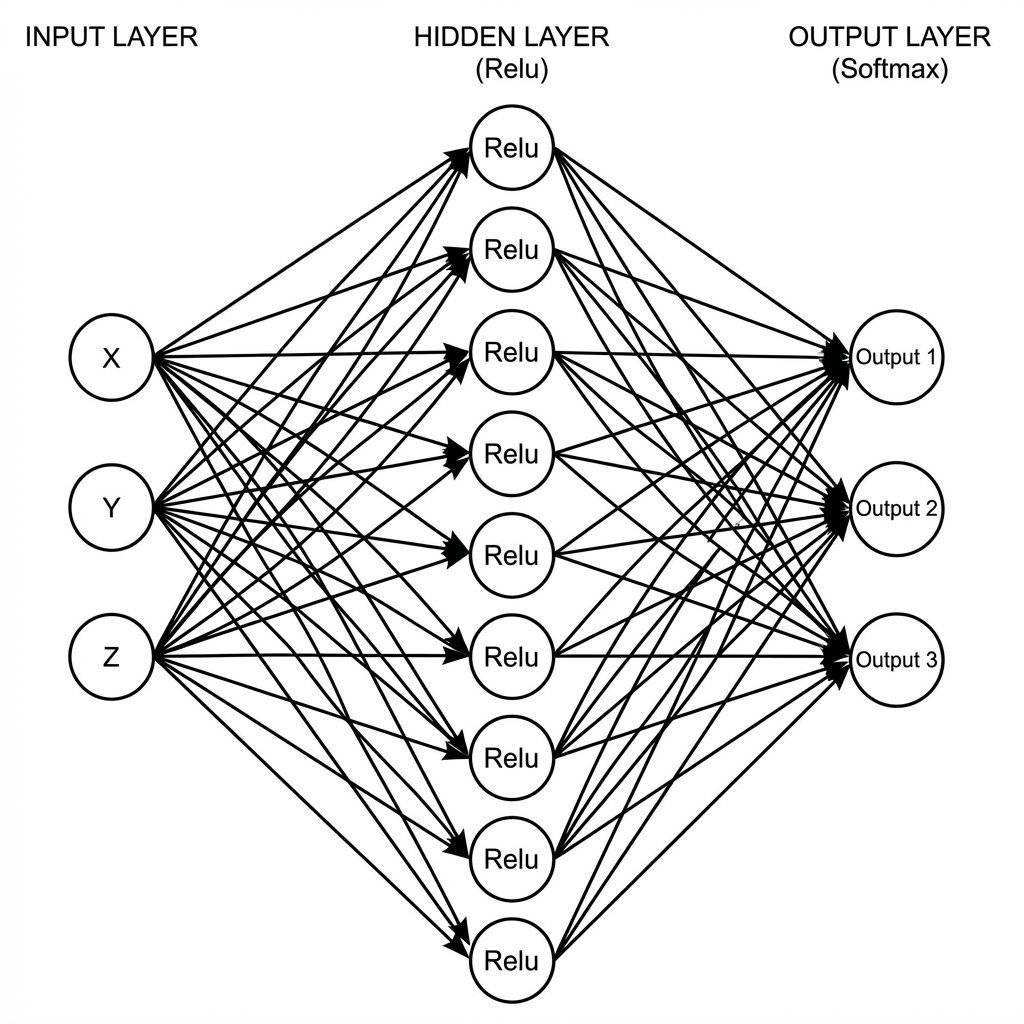

Step 2: Training in Google Colab

We don’t have a GPU. Google does. And they let us use it for free. We use TensorFlow Lite for Microcontrollers.

Key Python Concepts:

- Windowing: Slicing motion into 2-second segments.

- CNN: treating time-series data as an image.

- Softmax: Output probabilities that sum to 1.0.

The Training Graph: Watch the “Loss” curve. It should plummet like a stone. If it oscillates, your learning rate is too high.

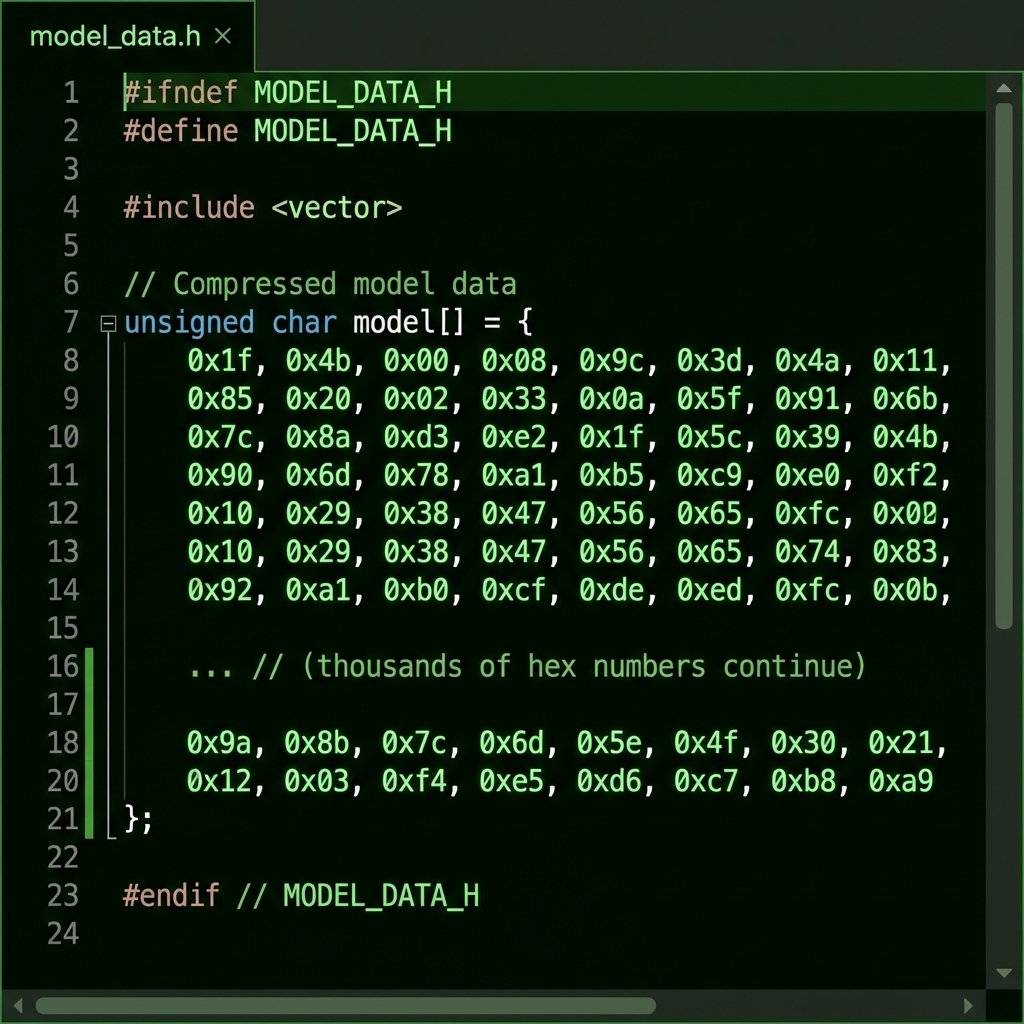

Step 3: The Conversion (xxd)

Once trained, we export a .tflite file.

But the ESP8266 IDE doesn’t know what a file is. It knows C++.

We run a command (on Linux/Mac):

xxd -i gesture_model.tflite > model.h

The Output:

A massive C array. This is your frozen brain.

// model.h

unsigned char gesture_model_tflite[] = {

0x1f, 0x00, 0x00, 0x00, 0x54, 0x46, 0x4c, 0x33, 0x00, 0x00, 0x00, 0x00,

0x14, 0x00, 0x20, 0x00, 0x04, 0x00, 0x08, 0x00, 0x0c, 0x00, 0x10, 0x00,

// ... 20,000 more bytes ...

};Understanding the Hex Dump (FlatBuffers)

You might wonder why we don’t just load the .tflite file from SPIFFS.

We could, but converting it to a C array allows the compiler to store it in PROGMEM (Flash Memory) easily.

The format isn’t random. It uses FlatBuffers.

- Zero-Copy: Access data directly without unpacking.

- RAM Efficiency: Minimal memory footprint compared to JSON.

Technical Deep Dive: The TensorFlow Lite Micro Architecture

How does Google fit TensorFlow into 50KB?

- No Dynamic Memory: All RAM is allocated upfront.

- Optimized Kernels: Includes only the operators you need.

- Static Graph: Execution plan is baked in at compile-time.

Variable Quantization

We talked about int8. But not all int8s are equal.

-

Symmetric: Mapping centered around zero.

-

Asymmetric: Uses a “Zero Point” offset.

-

Per-Channel: Different scaling factors per filter.

-

Why care? If your model accuracy sucks after conversion, switch from Asymmetric to Symmetric.

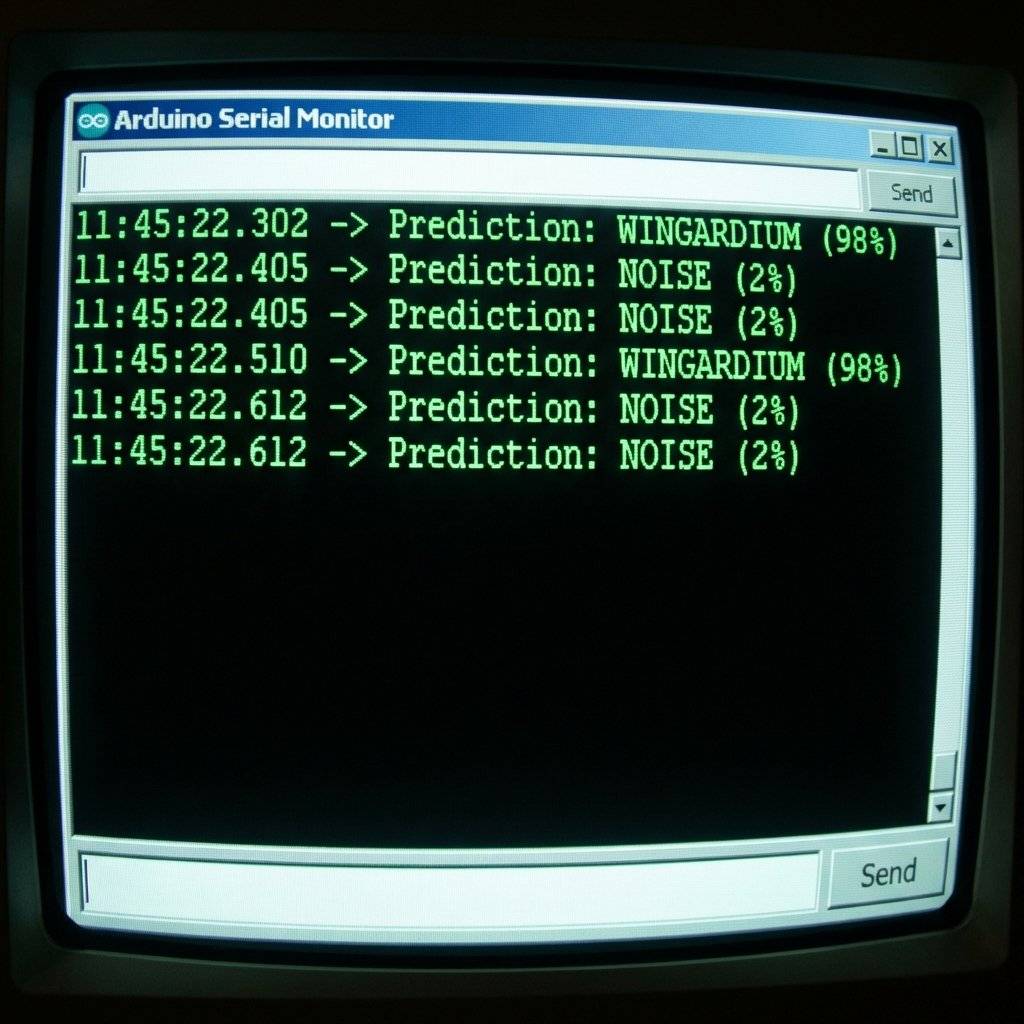

Step 4: Inference Engine (The ESP8266 Code)

This is the hardest part. Wiring TensorFlow into the Arduino environment.

We use the TensorFlowLite_ESP32 library (it works on 8266 mostly, or use EloquentTinyML wrapper for sanity).

The Interpreter: Think of the Interpreter as the “Player” for your model file.

- Interpreter: The “player” for your model file.

- Tensor Arena: Math scratchpad reserved in RAM.

- Invoke: The command that runs the neural network.

#include <EloquentTinyML.h>

#include "model.h"

#define NUMBER_OF_INPUTS 60 // 20 samples * 3 axes

#define NUMBER_OF_OUTPUTS 3 // Wingardium, Circle, Noise

#define TENSOR_ARENA_SIZE 2 * 1024

Eloquent::TinyML::TfLite<NUMBER_OF_INPUTS, NUMBER_OF_OUTPUTS, TENSOR_ARENA_SIZE> ml;

void setup() {

Serial.begin(115200);

ml.begin(gesture_model_tflite);

}

void loop() {

float input_data[NUMBER_OF_INPUTS] = { /* ... perform windowing here ... */ };

// THE MAGIC HAPPENS HERE

float prediction[NUMBER_OF_OUTPUTS] = ml.predict(input_data);

if (prediction[0] > 0.8) {

Serial.println("WINGARDIUM LEVIOSA!");

}

}

Architectural Considerations: Why not Raspberry Pi?

You might ask, “Why suffer with 50kb RAM? Just use a Pi Zero.”

- Boot Time: ESP8266 boots in 200ms; Pi takes 30s.

- Integrity: Microcontrollers are immune to power-loss corruption.

- Real-Time: Zero background OS jitter.

Common Pitfalls (The “Gotchas”)

1. The “Ghost” Inputs

Neural Networks are excellent interpolators but terrible extrapolators. If you train it on “Wave” and “Punch”, and then you do a “Clap”, it won’t say “Unknown”. It will say “Wave (51%)”. Fix: You must train a “Background Class” or “Noise Class” containing random movements.

2. Memory Fragmentation

The Tensor Arena must be a contiguous block of RAM. If you use String objects or heavy heap allocation elsewhere, you might fragment the RAM so much that the Arena can’t fit, even if total free RAM is high.

Fix: Allocate the Arena globally and static.

3. Sampling Jitter

If your training data was sampled at 99Hz, 101Hz, 100Hz… but your inference loop runs at exactly 100Hz, the shapes will be warped.

Fix: Use micros() to enforce strict timing loops.

Optimization Techniques: Squeezing the Lemon

Your model is too big? Too slow?

- Pruning: Removing near-zero connections.

- Depthwise Convolutions: Splitting math into cheaper ops. ().

- Clock Speed: Doubling CPU to 160MHz.

system_update_cpu_freq(160);

- This doubles your inference speed instantly (at the cost of heat).

The “Hello World” of AI: Sine Wave Approximation

Before you build the wand, try to predict a Sine Wave.

- Input: (Time).

Predicting a curve proves the toolchain works before messy sensors are involved.

Troubleshooting TinyML: When the Brain Fails

Deploying AI to a $3 chip is not seamless. Here is how to fix the common crashes.

1. The “Arena Exhausted” Panic

The most common error is TensorArena allocates X bytes but needs Y.

-

Cause: Your model is too fat, or your

TENSOR_ARENA_SIZEis too small. -

Fix: Increase the size in 1kb increments. If you hit the ESP8266 RAM limit (roughly 40kb usable for heap), you must simplify the model (fewer layers, fewer neurons).

2. The Shape Mismatch

Training says: Input shape: [1, 60].

Inference says: Invoke() failed.

-

Cause: You are feeding 59 floats or 61 floats.

-

Math: 100Hz for 2s = 200 samples. 3 axes 600 inputs.

-

Fix: Hardcode buffer sizes with

#define.

3. The Endianness Trap

You trained on x86 (Intel, Little Endian). You deploy to a microcontroller (Usually Little Endian, but some are Big Endian).

- TFLite Micro handles this: Mostly. But if you manually bit-bang weights, you will get garbage predictions. Always use the standard

xxdor Python conversion scripts.

Frequently Asked Questions (TinyML Edition)

Q: Can I do Voice Recognition on ESP8266? A: Barely. The “Micro Speech” demo requires a PDM microphone and heavy DSP (Fast Fourier Transform). The ESP8266 is a bit underpowered for real-time audio. Use an ESP32 (dual core) for “Ok Google” style keywords.

Q: How does this affect Battery Life? A: AI is expensive. Running the CPU at 160MHz drains the battery in hours.

- Strategy: Keep the ESP8266 in Deep Sleep. Use an external ultra-low-power accelerometer (like ADXL345) to detect “motion”. Only WAKE the ESP8266 when motion is detected to run the Inference.

Q: My model works in Colab but fails on the Chip! A: This is usually “Data Drift”. Your training data came from a clean dataset. Your real-world data is noisy.

- Solution: Collect “Validation Data” using the chip itself. Record yourself waving the wand and add THAT to the training set.

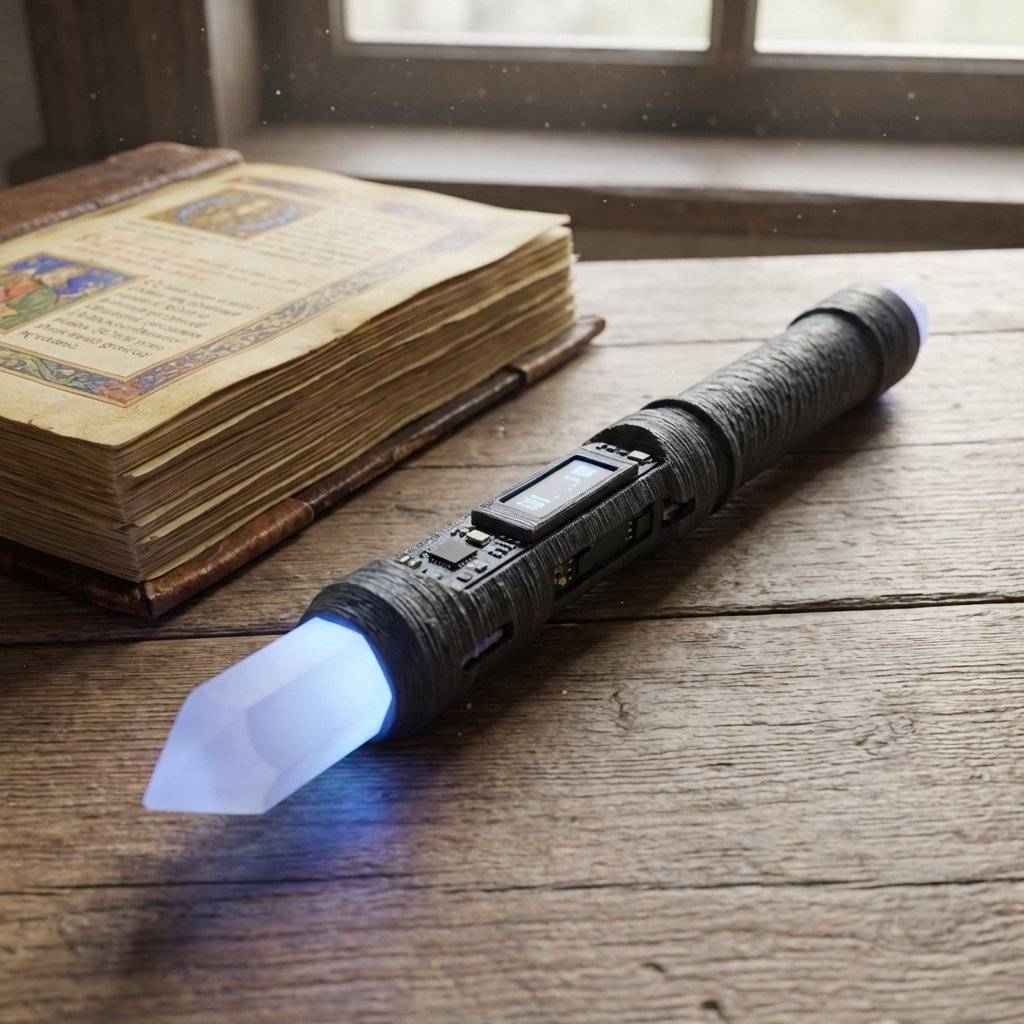

Final Project: The “Smart Wand”

Imagine a Harry Potter wand that actually controls your smart home.

- Gestures:

- Circle: Turn on Living Room Lights.

- Thrust: Lock the Front Door.

- Swipe Up: Open Blinds.

- Shake: Party Mode (Disco lights).

Hardware:

-

ESP8266 (WeMos D1 Mini) fits inside a PVC pipe.

-

MPU6050 Accelerometer taped to the tip.

-

LiPo Battery (18650) in the handle.

This is not Science Fiction. This is $10 of parts and an afternoon of coding. You have crossed the threshold from “Programmer” to “AI Engineer”.

Engineer’s Glossary (AI)

- Inference: Running a model to make a prediction.

- Quantization: Reducing precision to save memory (e.g., Float to Int8).

- Tensor: A multi-dimensional array of numbers.

- Tensor Arena: Scratchpad RAM for math.

- FlatBuffer: Zero-copy data format by Google.

- Epoch: One complete pass through the training data.

- Overfitting: Memorizing data instead of learning patterns.

- Loss: A measure of how “wrong” the model is.

Copyright © 2026 TechnoChips. Open Source Hardware (OSHW).